
Ryan Friberg

I am a computer scientist with a broad range of interests mainly

focused on machine learning (specifically computer vision and natural

language processing), robotics, and computer graphics. Outside of

computer science, I am an avid runner and committed language learner,

actively studying four foreign languages at an intermediate to

advanced level.


Current Projects:

Custom GPU Game/World Engine

I am currently building a new custom real-time GPU game

engine from scratch in C++ with planned support for

rendering, physics, and an entity-component system. This

project currently uses OpenGL but has plans to expand to

Metal and Vulkan in the future.

Previous Projects:

Visual Guidance for Infant Lumbar Puncture Training in XR

Published in IEEE in partnership with Columbia University's

School of Medicine, this is an educational system that leverages

XR features to give medical students more tangible practice

at pediatric lumbar punctures before performing one on a

patient. This tool used visual feedback and "gamified" the

procedure to better teach students while encouraging them to

continue practicing and improve their technique.

(Unity, C#), IEEE paper

GalaxIDNet: Context-based Image Retrieval

A context-based image retrieval pipeline built on a custom ViT

implementation and trained on the Galaxy10 DECaLS dataset. This

system queries a database using learned visual features only,

and returns the top-k most similar results as determined by a

custom similarity scoring metric. The tool was built to let

astrophysics researchers pool

examples of visually similar galaxies to facilitate the study

of galactic evolution and cosmology.

code (torch), paper (unsubmitted/unpublished)
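
As a rough illustration of the top-k retrieval step described above (not the project's actual code), the sketch below ranks stored feature vectors against a query vector. Cosine similarity is an assumption standing in for the custom scoring metric, and the names `cosine_similarity` and `top_k_similar` are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length feature vectors.

    Assumption: this stands in for the project's custom scoring metric.
    """
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat a zero vector as dissimilar to everything
    return dot / (norm_a * norm_b)


def top_k_similar(query, database, k):
    """Return the indices of the k database vectors most similar to query."""
    scored = [
        (cosine_similarity(query, vec), idx)
        for idx, vec in enumerate(database)
    ]
    # Highest score first; the sort is stable, so ties keep database order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [idx for _, idx in scored[:k]]


if __name__ == "__main__":
    # Toy 2-D "features"; real learned embeddings would be much larger.
    database = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1], [-1.0, 0.0]]
    print(top_k_similar([1.0, 0.0], database, k=2))
```

In practice the vectors would come from the trained ViT encoder, and a large database would likely use a batched tensor operation or an approximate nearest-neighbor index rather than a Python loop.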

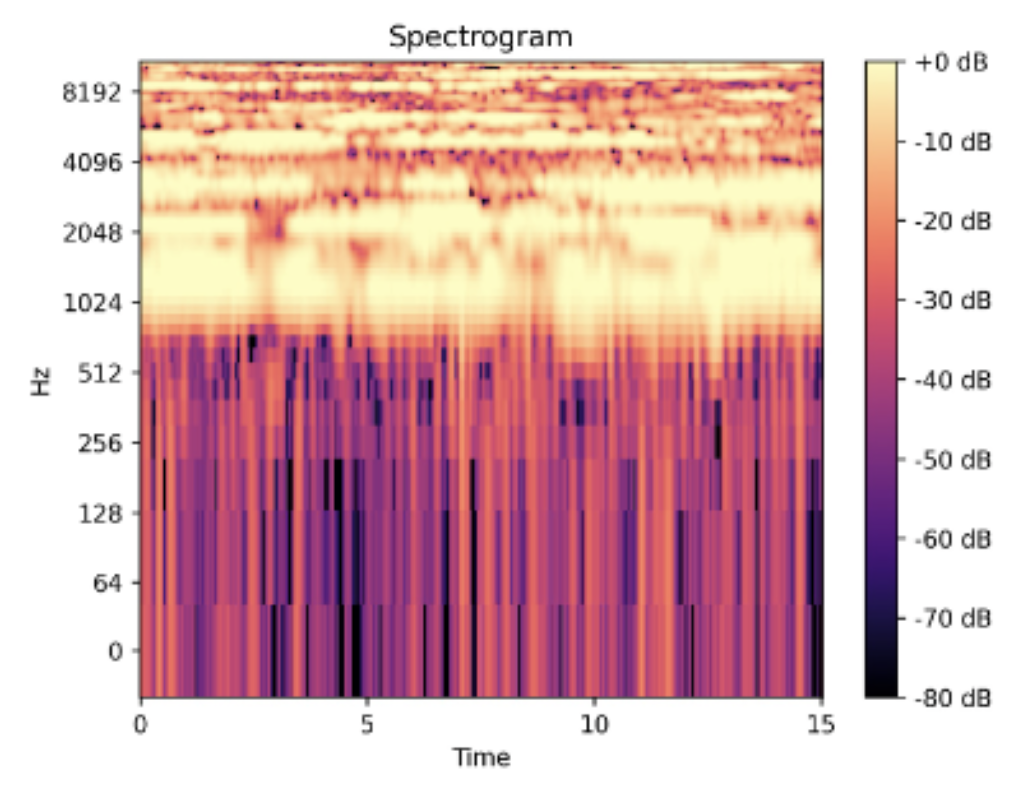

Image-to-Music: The "Hans Zimmer" Model

A deep learning pipeline that fine-tuned a ViT

on scraped images of diverse scenes depicting certain emotions

(calm, gloomy, happy, etc.), using the search queries

to assign the metadata/labels for each image. The pipeline

scraped YouTube for audio depicting the same set of emotions,

using the emotional categories as the method of mapping from

one modality to the other. The audio was clipped into small

chunks and converted to spectrogram image data. The final part

of the pipeline was fine-tuning Stable Diffusion to directly

generate spectrogram images given a sequence of unique tokens

(keywords/emotions) generated by the first model.

code (torch, huggingface), paper (unsubmitted/unpublished)

Recreation of End-to-End Robotics Perception and Motion Planning Systems

A robotics system for environment perception as well as path

planning and control. For perception, it used a U-Net semantic

segmentation network for detection of objects in the robot's

pick-and-place task environment. This stage of the pipeline

leveraged algorithms such as ICP to perform pose estimation,

and had implementations of both visual affordance and action

regression grasp prediction systems. The path planning and

obstacle avoidance functionality was built on the RRT

algorithm.

(pybullet, torch), no associated paper

Context-Limited Optical Character Recognition

A custom ResNet architecture trained on image data containing

individual letters (both upper and lowercase) and numerical

digits. The main research question was whether a neural

network trained on a large, custom aggregate dataset of

visually diverse but strictly binary images could still

extract text information from raw images containing text

characters in any visual context. The pipeline used

Pytesseract (a Python wrapper for Google's Tesseract OCR

engine) for detection and the custom network for recognition.

code (torch), paper (unsubmitted/unpublished)

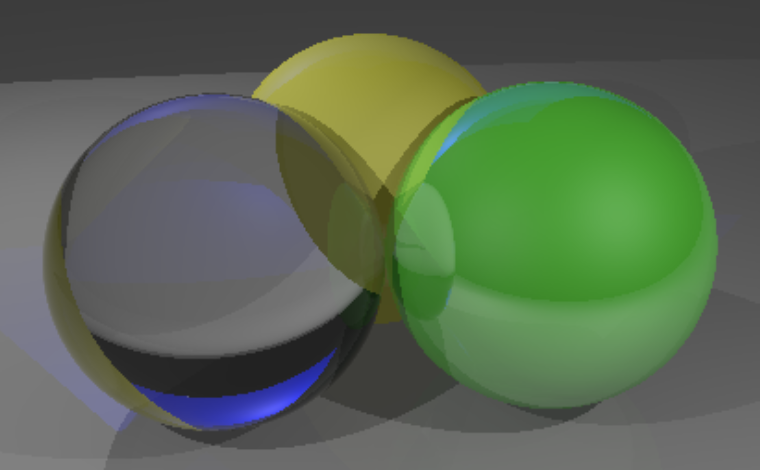

Ray Tracing Platform

A ray tracing platform with implementation of various

components. This includes materials (such as Blinn-Phong or

glass), illumination, shadows, reflections, mesh support,

texture mapping, and optimizations such as the utilization of

bounding volume hierarchies. Images are generated by firing

simulated rays through each pixel of the screen and computing

each ray's interactions with the scene to determine the

resulting color of each pixel. Rather than

real-time rendering, this engine's goal was pre-rendering

one image at a time to a high degree of realism.

(C++), no associated paper
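
To illustrate the per-pixel ray casting described above, here is a minimal sketch in Python (the platform itself was written in C++, and this is not its code). It fires one ray per pixel at a single sphere and records hit or miss. The camera setup (origin at zero, image plane at z = -1) and the names `ray_sphere_hit` and `render_hit_mask` are assumptions made for the example.

```python
import math


def ray_sphere_hit(origin, direction, center, radius):
    """Return the nearest positive hit distance along the ray, or None.

    Solves |origin + t * direction - center|^2 = radius^2 for t.
    """
    oc = tuple(o - c for o, c in zip(origin, center))
    a = sum(d * d for d in direction)
    b = 2.0 * sum(d * o for d, o in zip(direction, oc))
    c = sum(o * o for o in oc) - radius * radius
    disc = b * b - 4.0 * a * c
    if disc < 0.0:
        return None  # the ray misses the sphere entirely
    sqrt_disc = math.sqrt(disc)
    for t in ((-b - sqrt_disc) / (2.0 * a), (-b + sqrt_disc) / (2.0 * a)):
        if t > 1e-9:  # ignore intersections behind the ray origin
            return t
    return None


def render_hit_mask(width, height, center, radius):
    """Fire one ray through each pixel; True where the sphere is hit."""
    mask = []
    for row in range(height):
        line = []
        for col in range(width):
            # Map the pixel center to [-1, 1] on an image plane at z = -1.
            x = (col + 0.5) / width * 2.0 - 1.0
            y = 1.0 - (row + 0.5) / height * 2.0
            hit = ray_sphere_hit((0.0, 0.0, 0.0), (x, y, -1.0), center, radius)
            line.append(hit is not None)
        mask.append(line)
    return mask


if __name__ == "__main__":
    for line in render_hit_mask(11, 11, (0.0, 0.0, -3.0), 1.0):
        print("".join("#" if hit else "." for hit in line))
```

A full ray tracer would replace the boolean with a shaded color, spawn secondary rays for shadows and reflections, and use a bounding volume hierarchy to avoid testing every object against every ray.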

Resource Restricted Deep Reinforcement Learning

A deep-Q reinforcement learning network using experience

replay on a state-space restricted memory bank. This project

experimented with various reward systems and training

configurations with the goal of demonstrating that imposing

optimal restrictions on the network (to avoid spending time

training on superfluous actions or game-state information)

can allow reinforcement learning to improve gameplay even in

heavily resource-restricted environments on reasonable

timescales (hours on a single GPU). The model was trained on

Frogger for the Atari 2600, Super Mario Bros. for the NES,

and Flappy Bird for iOS using OpenAI Gym, with demonstrable

gameplay improvements in each over the course of training.

code (torch), paper (unsubmitted/unpublished)
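
For readers unfamiliar with experience replay, the sketch below shows a generic fixed-capacity replay buffer of the kind a deep Q-network samples from during training. It is an assumption-laden illustration, not the project's code: the class name `ReplayBuffer`, the transition layout, and the simple "drop the oldest entry" policy are all hypothetical, and the project's state-space restriction is not modeled here.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-capacity store of (state, action, reward, next_state, done)."""

    def __init__(self, capacity, seed=None):
        # A deque with maxlen evicts the oldest transition automatically.
        self.buffer = deque(maxlen=capacity)
        self.rng = random.Random(seed)

    def push(self, state, action, reward, next_state, done):
        """Record one transition observed while playing."""
        self.buffer.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        """Draw a uniform random minibatch without replacement."""
        return self.rng.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


if __name__ == "__main__":
    memory = ReplayBuffer(capacity=3, seed=0)
    for step in range(5):
        memory.push(step, 0, 1.0, step + 1, False)
    print(len(memory))
    print(memory.sample(2))
```

Sampling uniformly from past transitions breaks the correlation between consecutive frames, and the capacity bound keeps memory use predictable on a single GPU machine.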

Contextualized Medication Event Extraction

A deep-learning NLP pipeline in accordance with the Harvard

Medicine National NLP Clinical Challenge (unsubmitted to the

competition due to logistical reasons). Many transformer-based

large language models (BERT, DistilBERT, GPT-2, etc.) were

deployed and compared on the task of processing and extracting

relevant medical information from raw physician note text

data. The implementation achieved success in areas such as

named entity recognition (NER) and various contexts in which

diagnoses were given.

code (torch, huggingface), paper (unsubmitted/unpublished)

Misc. Smaller Machine Learning Projects

In addition to the projects listed above, I have worked on

many smaller machine learning projects.

Examples include from-scratch implementations

of specific network architectures (LSTMs, ViT,

etc.). A few examples are linked below:

code (torch) Vision models

code (torch, lightning) Language models